Reflections inspired by Water & Music’s latest research and webinar

The conversation around artificial intelligence in the music industry is no longer about whether it will affect the ecosystem. It is about how and, more importantly, who gets credited and compensated in this new paradigm. Water & Music’s recent report and webinar, by Cherie Hu, Alexander Flores, and Yung Spielburg, offer a nuanced, technical deep dive into one of the most pressing issues facing the industry: attribution in music AI. It is also a topic we discuss regularly here at Flower of Sound.

So what is Attribution?

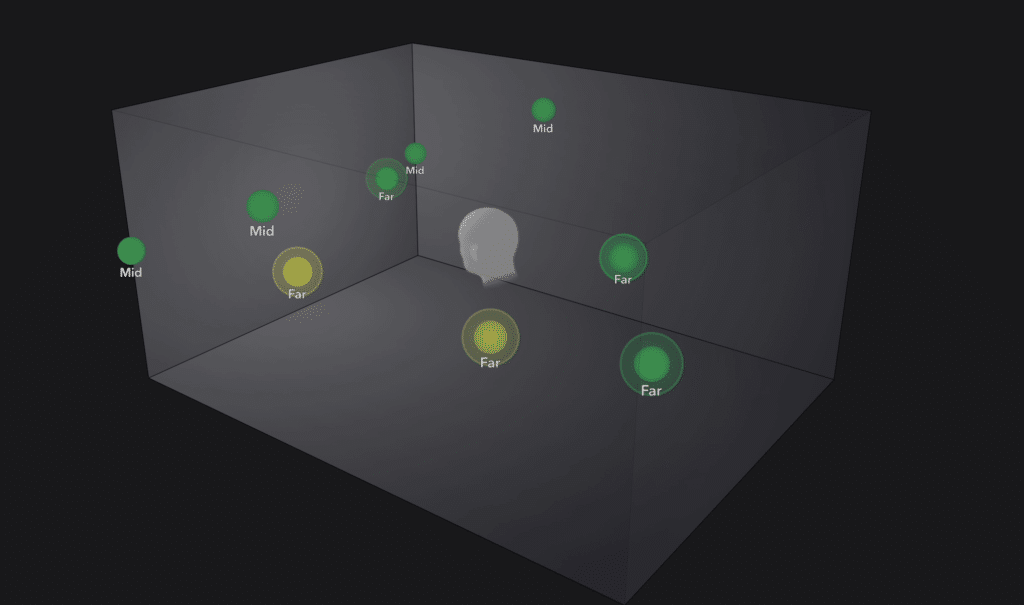

Attribution systems aim to establish causal connections between training data (that is, the work of the original makers) and AI-generated musical output. This goes beyond copyright discussions or deepfake detection, which focus mostly on surface-level similarity. Unlike sampling, which lifts direct sonic fragments, music AI learns abstract patterns such as chords, timbres, textures, or moods across multiple layers of data. One track might reflect the influence of dozens of sources, which makes tracing its roots far more complex. Most importantly, no one fully understands how a given output is created or who can claim part of the copyright. It’s basically a black box.

So who decides which musical elements deserve compensation? Is it the chord progression, the sonic texture, the production style? Consider also the current hype around turning images into the style of Studio Ghibli: who gets the credit? The more granular the system, the higher the computational load and the more subjective the decisions, because even the way prompts are written or data is augmented plays a role in what an AI ultimately produces.

Several startups are tackling this challenge from different angles. Companies like Sureel and Musical AI are building granular, model-level attribution tools, while others such as LANDR and Lemonaide opt for pro-rata, cohort-based distribution of rights. These divergent methods reflect both the technical limitations of current systems and the urgent need for frameworks that feel fair, scalable, and transparent.
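To make the difference between the two approaches concrete, here is a minimal Python sketch. It is an illustration under our own assumptions, not a description of how any of the companies named above actually compute payouts. The function `split_pool`, the artist names, and all numbers are hypothetical. The point is only that both approaches divide the same pool, and what changes is where the weights come from: catalog size for a cohort-based split, per-output influence scores for a granular one.

```python
def split_pool(pool, weights):
    """Divide a royalty pool in proportion to the given weights.

    Hypothetical helper for illustration only.
    """
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    return {name: pool * w / total for name, w in weights.items()}


# Assumed example data: number of tracks each artist contributed
# to the training catalog (cohort-based, pro-rata weights).
catalog_tracks = {"artist_a": 60, "artist_b": 30, "artist_c": 10}

# Assumed example data: influence scores for one generated track,
# as a granular attribution model might report them.
influence_scores = {"artist_a": 1, "artist_b": 2, "artist_c": 7}

cohort_payout = split_pool(100.0, catalog_tracks)
granular_payout = split_pool(100.0, influence_scores)

print(cohort_payout)
print(granular_payout)
```

In this made-up example, the artist with the smallest catalog receives the smallest share under the pro-rata split but the largest share under the granular split, because their work influenced this particular output the most. The hard part in practice is producing trustworthy influence scores, which this sketch simply takes as given.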

Where We Stand as a Startup Working with Sound and AI

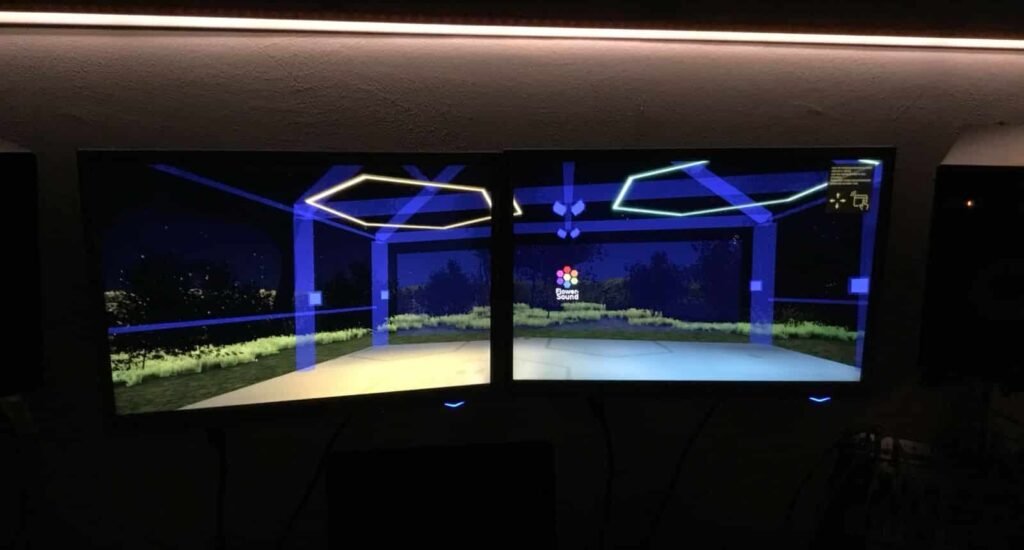

We find ourselves inspired by these conversations and grateful for the clarity and depth Water & Music continues to bring to this evolving space. As a company deeply rooted in well-being, immersive audio, and artistic integrity, we are navigating the AI wave with careful intent.

We constantly ask ourselves: where can AI help us build a sustainable and ethical business, and where should it not be used?

-

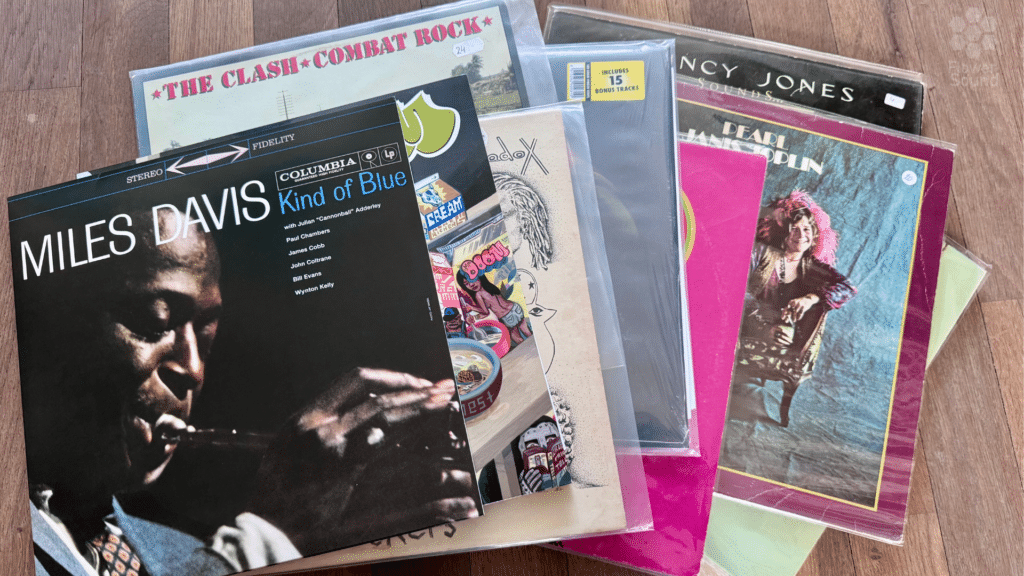

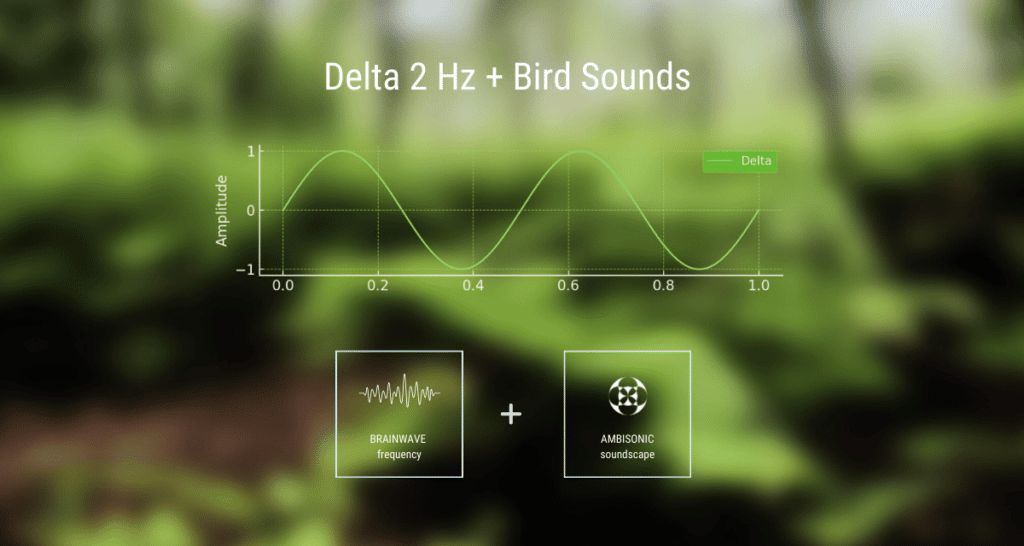

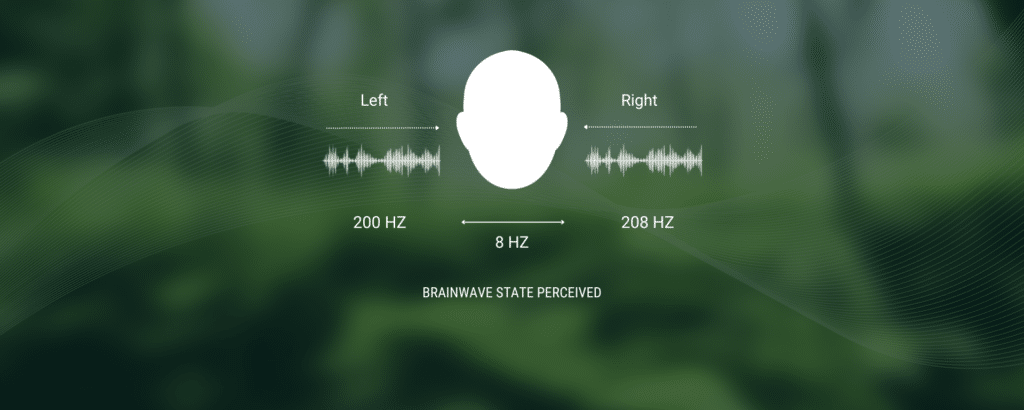

For sound: we’ve chosen, for now, to keep all our audio human-made for humans.

-

For images: we do experiment with AI tools, but we remain 100% transparent about when an image is AI-generated. In fact, 99% of our visuals are made in-house by our team or collaborators.

-

For text: as non-native English speakers, we use AI-based tools to help refine our communication, especially for clarity. But even here, we tread mindfully, always returning to the question: what is a responsible, human-centered use of this technology?

What Water & Music reminds us is that attribution is not just a technical challenge but also a cultural and ethical one, and not only for music but for every use of AI, especially creative ones. We want to extend our sincere thanks to Cherie Hu, Alexander Flores, and Yung Spielburg for their research.

Image: Logo of Water & Music

Source: Read the AI report here